

This blog tells you where to find the crawl stats report in Google Search Console and how to read the information in it.

What is crawling?

When we talk about website crawling, we mean that Google, or another search engine, explores the website. The search engine's bots - also called crawlers - look at the website.

You can compare this to a spider crawling through its web. The crawler works its way through the entire website so its content can be indexed. When someone searches for a certain term, the search engine picks the most relevant pages from all indexed pages with that term and shows them to the searcher.

Can Google not crawl your website properly? Then your pages won't be indexed properly either. That is why a website that can't be crawled properly is also harder to find in search engines.

The role of crawl statistics within SEO: Crawling vs. Indexing

The crawl statistics report from Google Search Console gives you a lot of insight into how Google crawls your website. For example, you can see at a glance which status codes your pages returned, that is, the response the server sent back for each requested page. That also tells you which pages have server errors and which cannot be found.

But before we dive into crawl statistics, let's explain the difference between crawling and indexing.

Crawling

During crawling, Google's bot visits the URLs in your sitemap and all URLs Google has crawled before. The crawler follows the links on those pages, looking for new pages and changed content.
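To make the discovery step concrete, here is a toy Python sketch: start from the sitemap URLs plus the URLs the crawler already knows, and keep following links until no new URLs turn up. The in-memory `LINKS` graph and the `discover_urls` helper are hypothetical; a real crawler like Googlebot also fetches pages over HTTP, respects robots.txt and schedules its visits, all of which this sketch leaves out.

```python
from collections import deque

# Hypothetical link graph: each URL maps to the links found on that page.
LINKS = {
    "/": ["/blog", "/contact"],
    "/blog": ["/blog/crawl-stats", "/"],
    "/blog/crawl-stats": ["/blog"],
    "/contact": [],
    "/old-page": ["/contact"],  # no longer linked, but known from an earlier crawl
}


def discover_urls(sitemap_urls, known_urls, links):
    """Breadth-first discovery: start from sitemap and known URLs, then follow links."""
    seen = set()
    queue = deque(list(sitemap_urls) + list(known_urls))
    order = []
    while queue:
        url = queue.popleft()
        if url in seen:
            continue
        seen.add(url)
        order.append(url)
        # Queue every link on the page that hasn't been visited yet.
        for link in links.get(url, []):
            if link not in seen:
                queue.append(link)
    return order


crawled = discover_urls(sitemap_urls=["/"], known_urls=["/old-page"], links=LINKS)
print(crawled)  # ['/', '/old-page', '/blog', '/contact', '/blog/crawl-stats']
```

Note how `/old-page` still gets crawled even though no page links to it: the crawler already knew the URL.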

Indexing

The crawler sends every indexable page it encounters to Google's index. Google then analyzes the content of that page to understand what it is about.

Thus, URLs are first discovered during crawling and then interpreted during indexing.

What are crawl statistics?

Google Search Console's crawl statistics give you a clearer picture of how the Googlebot crawls your website. For example, you can see which Googlebot crawled the website, what the status codes are for the pages on your website and which file types were crawled.

In other words, the crawl statistics contain a wealth of information and data. We love that, and so will you, because you can use them to improve the technical side of your website.

Where can you find crawl statistics in Google Search Console?

The crawl statistics are very easy to find in Google Search Console. Log in, choose the property you want to see the crawl statistics for and go to Settings.

Under Crawling, you'll find the Crawl stats report. It can be that easy!

Open the report and keep this blog open next to it, so you can see exactly what you'll find there.

The different crawl requests at a glance

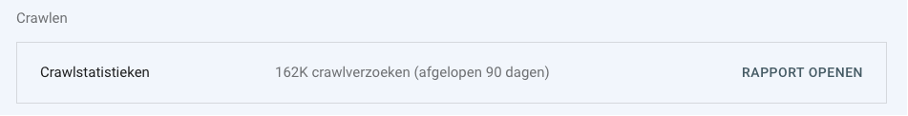

In the crawl report you will find several pieces of data. At the top of the report we see the total number of crawl requests during the selected period, the total number of bytes downloaded and the average server response time.
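To show what these three headline figures mean, here is a small Python sketch that calculates them from a handful of crawl requests. The `CrawlRequest` record, the `summarize` helper and the sample data are hypothetical (think of rows from your own server log); Search Console calculates its figures from Google's own crawl data, and this is not its actual method.

```python
from dataclasses import dataclass


@dataclass
class CrawlRequest:
    """One hypothetical crawl request, roughly like a row in a server log."""
    url: str
    status: int
    size_bytes: int
    response_ms: float


def summarize(requests):
    """Return (total requests, total bytes downloaded, average response time in ms)."""
    total_requests = len(requests)
    total_bytes = sum(r.size_bytes for r in requests)
    # Guard against an empty period so we never divide by zero.
    avg_response_ms = (
        sum(r.response_ms for r in requests) / total_requests if total_requests else 0.0
    )
    return total_requests, total_bytes, avg_response_ms


sample = [
    CrawlRequest("/", 200, 48_000, 120.0),
    CrawlRequest("/blog", 200, 52_000, 180.0),
    CrawlRequest("/old-page", 404, 1_500, 60.0),
]
print(summarize(sample))  # (3, 101500, 120.0)
```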

Below that you will find the host status. Any availability issues Google ran into while crawling your website are listed here. For example, if Google had trouble fetching your robots.txt file, resolving your domain name (DNS) or connecting to your server, you will see that here.

How are crawl requests broken down?

What makes our SEO heart beat faster - drum roll - are the breakdowns of crawl requests.

In fact, in the crawl report you will find all crawl requests broken down:

- By status code

- By file type

- By purpose

- By Googlebot type

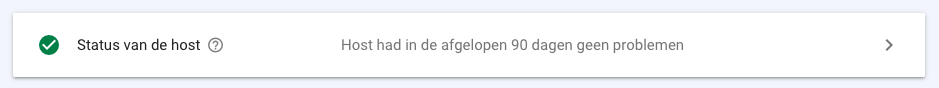

By status code

In this breakdown, you can see exactly which status codes Googlebot ran into while crawling. Do you see a lot of 301s, 304s or 404s here? Then click the status code to see right away which URLs returned it.

Most common status codes:

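- 200 (OK): the page was found

- 301 (Moved permanently): the page redirects to another URL

- 304 (Not modified): the page hasn't changed since the last crawl, so Google can use the version it already has

- 404 (Not found): the page cannot be found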
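If you also keep your own list of crawled URLs (for example from your server log), you can group them by status code the same way the report does. A minimal Python sketch, assuming a hypothetical `crawl_log` of `(url, status)` pairs; the `urls_by_status` helper and `STATUS_MEANING` table are made up for this example and are not part of any Search Console API.

```python
from collections import defaultdict

# Short descriptions of the most common status codes listed above.
STATUS_MEANING = {
    200: "OK: the page was found",
    301: "Moved permanently: the page redirects to another URL",
    304: "Not modified: the page hasn't changed since the last crawl",
    404: "Not found: the page cannot be found",
}


def urls_by_status(crawl_log):
    """Group crawled URLs by the status code they returned."""
    groups = defaultdict(list)
    for url, status in crawl_log:
        groups[status].append(url)
    return dict(groups)


# Hypothetical crawl log: one (url, status) pair per crawl request.
crawl_log = [
    ("/", 200),
    ("/blog", 200),
    ("/old-blog", 301),
    ("/contact", 304),
    ("/summer-sale-2019", 404),
    ("/summer-sale-2019", 404),
]

for status, urls in sorted(urls_by_status(crawl_log).items()):
    print(status, STATUS_MEANING.get(status, "other status code"), urls)
```

Listing a URL once per request (rather than once in total) makes repeated 404s stand out, which is exactly where crawl budget leaks away.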

Do you see a lot of 404s in the crawl report, for example? Then fix them, so Googlebot doesn't keep spending crawl budget on them over and over again.

Crawl budget

Every day, Google crawls a certain number of pages on your website. That number is called the crawl budget, and it depends on factors such as the authority of your website. The fewer error status codes you have in the crawl report, the more of your crawl budget is spent on pages that return a 200. And that's what you want, of course!
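A quick way to see how your crawl budget is divided is to calculate what share of crawl requests ended in each status code. The Python sketch below assumes a hypothetical list of status codes for one day of crawling, and `crawl_budget_share` is an illustrative helper. Google doesn't publish your crawl budget as a fixed number, so treat this only as a rough proxy.

```python
from collections import Counter


def crawl_budget_share(statuses):
    """Return, per status code, the fraction of all crawl requests that ended in it."""
    counts = Counter(statuses)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {status: count / total for status, count in counts.items()}


# Hypothetical day of crawling: 80 OK pages, 5 redirects and 15 pages not found.
statuses = [200] * 80 + [301] * 5 + [404] * 15
share = crawl_budget_share(statuses)
print(f"Spent on 200 pages: {share[200]:.0%}")  # Spent on 200 pages: 80%
print(f"Spent on 404 pages: {share[404]:.0%}")  # Spent on 404 pages: 15%
```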

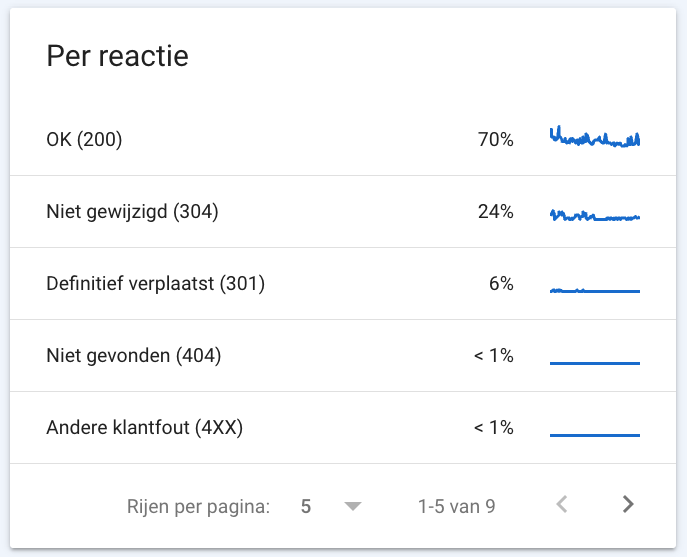

By file type

This breakdown shows all the file types Google has downloaded. Does your website have a slow response time? Then check here which resources were crawled. For example, is Google crawling lots of small images? Then you can exclude those from crawling in your robots.txt.
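You can approximate this breakdown from a list of crawled URLs. This Python sketch guesses a file type from the extension in the URL path; the `EXTENSION_TYPES` mapping and `file_type` helper are hypothetical simplifications, since a crawler would rather rely on the `Content-Type` header the server returns.

```python
from collections import Counter
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Hypothetical, simplified mapping from path extension to a file-type bucket.
# Paths without an extension (like "/" or "/blog/crawl-stats") are assumed to be HTML.
EXTENSION_TYPES = {
    "": "HTML",
    ".html": "HTML",
    ".jpg": "Image",
    ".png": "Image",
    ".webp": "Image",
    ".css": "CSS",
    ".js": "JavaScript",
    ".json": "JSON",
    ".pdf": "PDF",
}


def file_type(url):
    """Guess a file-type bucket from the extension of the URL's path."""
    suffix = PurePosixPath(urlparse(url).path).suffix.lower()
    return EXTENSION_TYPES.get(suffix, "Other file type")


crawled = [
    "https://example.com/",
    "https://example.com/blog/crawl-stats",
    "https://example.com/img/icon-16.png",
    "https://example.com/img/icon-32.png",
    "https://example.com/css/site.css",
    "https://example.com/js/app.js",
]
print(Counter(file_type(url) for url in crawled))
```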

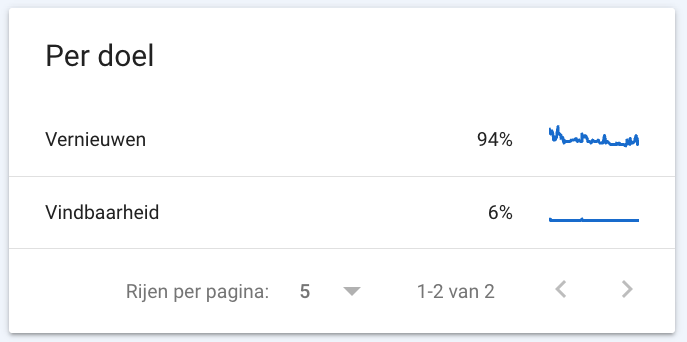

By purpose

The crawl report also shows the ratio between URLs that were crawled again and URLs that were crawled for the first time. Under 'Refresh' you will find all URLs Googlebot had already crawled before, and under 'Discovery' you will find all URLs the crawler found for the first time.
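The split between 'Refresh' and 'Discovery' boils down to one question per crawl request: had the crawler seen this URL before? A minimal Python sketch, assuming hypothetical lists of previously crawled URLs and today's crawl requests; `split_by_purpose` is an illustrative helper, not how Google labels requests internally.

```python
def split_by_purpose(crawled_urls, previously_crawled):
    """Label each crawl request as 'Refresh' (known URL) or 'Discovery' (new URL)."""
    known = set(previously_crawled)
    refresh, discovery = [], []
    for url in crawled_urls:
        if url in known:
            refresh.append(url)
        else:
            discovery.append(url)
            known.add(url)  # Once crawled, a URL counts as known for later requests.
    return refresh, discovery


# Hypothetical data: URLs crawled before, and today's crawl requests.
previously_crawled = ["/", "/blog", "/contact"]
crawled_today = ["/", "/blog", "/blog/crawl-stats", "/contact", "/blog/crawl-stats"]
refresh, discovery = split_by_purpose(crawled_today, previously_crawled)
print(f"Refresh: {len(refresh)}, Discovery: {len(discovery)}")  # Refresh: 4, Discovery: 1
```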

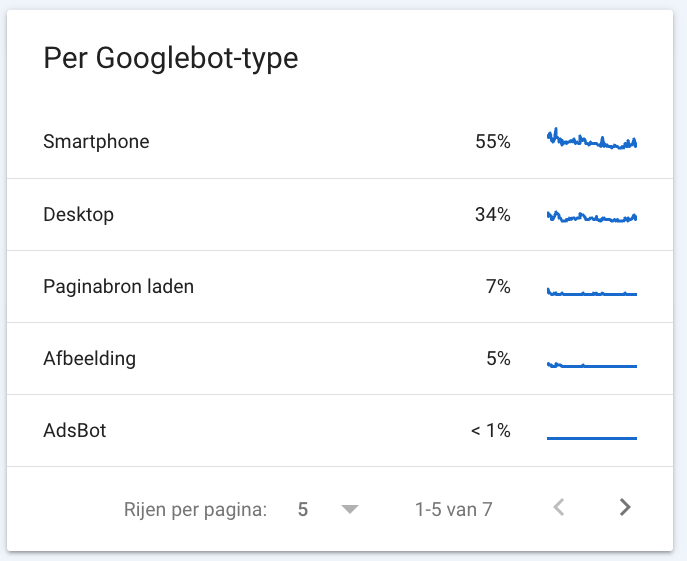

By Googlebot type

Finally, the crawl requests are broken down by Googlebot type. Google uses different bots with different user agents that crawl for different reasons and behave differently. Think of a smartphone bot, a desktop bot, an image bot, an ads bot or a store bot.

Each crawler identifies itself with its own user agent, and the smartphone and desktop crawlers simulate a visitor on that type of device. Most of your crawl requests should come from your primary crawler, which is always either the smartphone or the desktop crawler.
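You can roughly tell these crawlers apart by their user-agent string. The Python sketch below uses simplified substring checks; the example strings follow the format Google documents for its smartphone, desktop and image crawlers (with `W.X.Y.Z` standing in for the Chrome version), but the `googlebot_type` helper is hypothetical and doesn't cover every Google crawler. Keep in mind that anyone can fake a user agent, which is why Google recommends verifying real Googlebot traffic with a reverse DNS lookup.

```python
# Example user-agent strings in the format Google documents for its crawlers.
# "W.X.Y.Z" stands in for the Chrome version number.
SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html) Chrome/W.X.Y.Z Safari/537.36"
)
IMAGE_UA = "Googlebot-Image/1.0"


def googlebot_type(user_agent):
    """Roughly classify a Google crawler by its user-agent string (hypothetical helper)."""
    ua = user_agent.lower()
    # Check specialised crawlers first: "googlebot-image" also contains "googlebot".
    if "googlebot-image" in ua:
        return "Image"
    if "adsbot-google" in ua:
        return "AdsBot"
    if "storebot-google" in ua:
        return "StoreBot"
    if "googlebot" in ua:
        # The smartphone crawler's user agent contains "Mobile"; the desktop one doesn't.
        return "Smartphone" if "mobile" in ua else "Desktop"
    return "Not a Google crawler"


for ua in (SMARTPHONE_UA, DESKTOP_UA, IMAGE_UA):
    print(googlebot_type(ua))
```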

Concrete tips

How do you get the most out of crawl statistics? We'd love to explain that to you. Check out our tips below:

Steer the crawl budget by resolving 404s and server errors

Is a lot of crawl budget spent on pages with status codes you'd rather not see, such as 404s? Or on pages with server errors? That's a waste, because it leaves less budget, or even none, for your other pages. So fix the errors you encounter to make sure your crawl budget is used as efficiently as possible.

Make sure your website is easy for crawlers to read

Google's bots will visit your website on their own, but you can make things a lot easier for them! And that matters, because the easier your website is for the bots to read, the more findable it will be.

Help the crawlers by excluding pages you don't want crawled in robots.txt, submitting new pages manually in Google Search Console and making sure your sitemap is in order.
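A common sitemap problem is listing URLs that robots.txt blocks at the same time. This Python sketch checks for that with the standard library's `urllib.robotparser` and `xml.etree.ElementTree`. The robots.txt and sitemap contents and the `blocked_sitemap_urls` helper are hypothetical, and Python's parser doesn't support every rule Google does (such as `*` wildcards in paths), so treat the result as a rough check rather than a verdict.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

# Hypothetical sitemap.xml that (wrongly) lists a blocked URL.
SITEMAP_XML = """\
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/crawl-stats</loc></url>
  <url><loc>https://example.com/cart/checkout</loc></url>
</urlset>
"""

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def blocked_sitemap_urls(robots_txt, sitemap_xml, user_agent="Googlebot"):
    """Return the sitemap URLs that robots.txt blocks for the given user agent."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    # Parse bytes so the XML declaration's encoding attribute is honoured.
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]
    return [url for url in urls if not robots.can_fetch(user_agent, url)]


print(blocked_sitemap_urls(ROBOTS_TXT, SITEMAP_XML))
# ['https://example.com/cart/checkout']
```

An empty list means every URL in the sitemap is crawlable under these rules.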

Make sure your website is fast

Is your website slow to load? Then a crawler needs more time to move through its pages. That's a shame, because the crawler then covers less of your website than it would if the site loaded quickly.

So check your website's load time with Google PageSpeed Insights. Is the loading time bad? Then apply the tips PageSpeed Insights gives you!
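The reasoning that a slow site gets crawled less can be illustrated with a deliberately simplified toy model: assume a crawler fetches one page at a time during a fixed time window. In reality Google adjusts how much it crawls based on, among other things, how quickly and reliably your server responds, so the `pages_in_window` helper and its numbers are purely hypothetical.

```python
def pages_in_window(window_seconds, avg_response_ms):
    """Toy model: pages fetched one after another within a fixed time window."""
    # Convert the window to milliseconds and count the whole responses that fit in it.
    return int(window_seconds * 1000 // avg_response_ms)


# Hypothetical numbers: the same one-minute window, a fast and a slow server.
print(pages_in_window(60, 200))  # 300
print(pages_in_window(60, 800))  # 75
```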

What is crawling?

Crawling means that a search engine's bots, also known as crawlers, explore a website. When someone searches for a certain term, the search engine picks the most relevant pages from all indexed pages with that term and shows them to the searcher.

What are crawl statistics?

In Google Search Console's crawl statistics, you can see all the data that Google has collected while crawling. The crawl statistics show exactly which URLs were crawled, what the status code of those URLs is and what file types were crawled. This gives you more insight into how your website is being read by Google.

Where can you find crawl statistics in Google Search Console?

You can find the crawl statistics very easily in Search Console. Go to Settings and open the report under Crawling. There you will find all the information Google collected while crawling.

Written by: Nicole de Boer

Nicole is Teamlead CRO at OMA. She spices up your website with fine SEO content and chops up your competition. Delicious and healthy all in one.